Data modeling is the craft of turning messy, real‑world questions into data that systems can trust and people can use. This article walks through the three classic layers of that craft—conceptual, logical, and physical modeling—showing how each one tightens the focus from broad business meaning to platform‑specific DDL while ensuring the integrity, performance, and governance that the organization relies on. Along the way we will also review a fourth layer—transformational modeling—that captures the lineage and reshaping of data after it lands, completing the story from idea to insight.

Conceptual

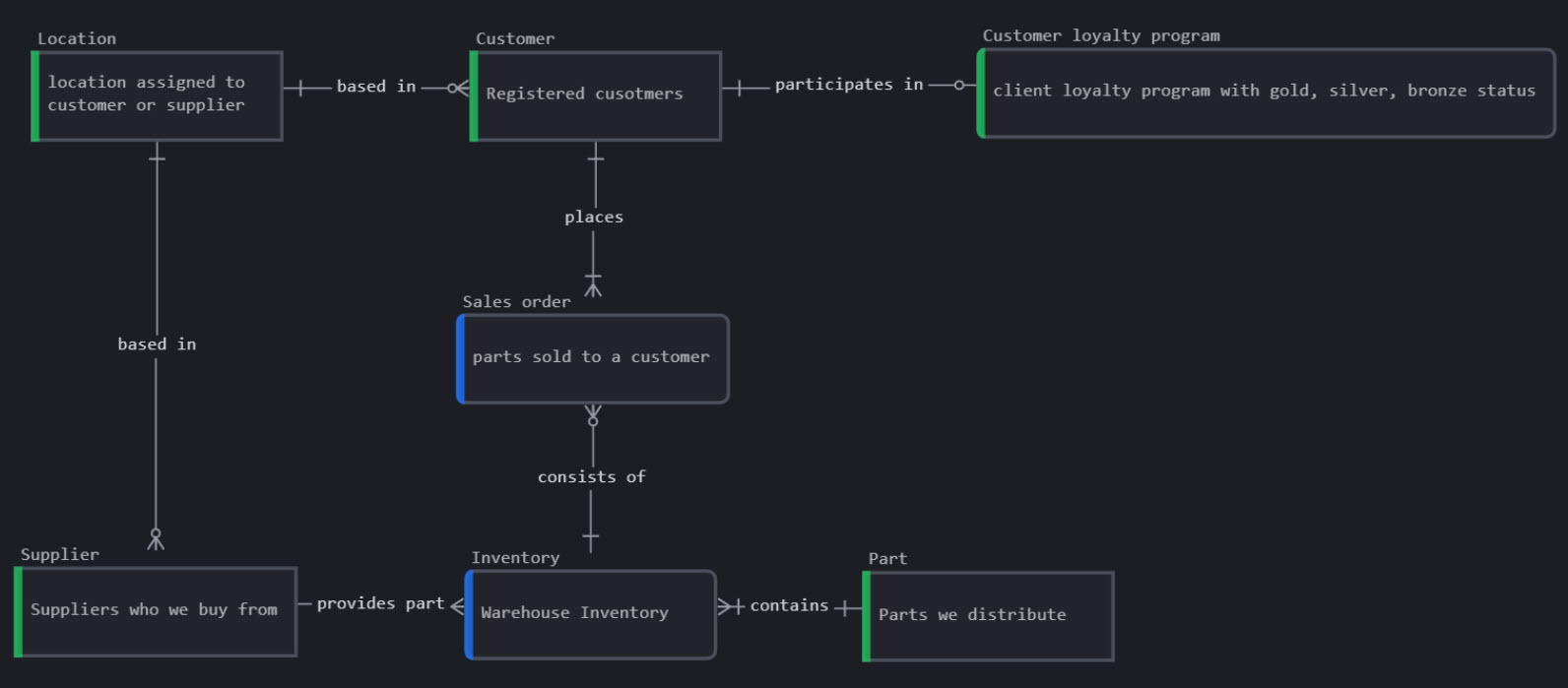

Modeling begins long before databases—or even data—enter the picture; it starts with the business itself. A conceptual diagram should be as valid for describing the organization’s operating model as it would be for laying the foundations of its database landscape.

Conceptual modeling is a process of identifying and visually mapping the moving pieces, or entities, of a business operation.

Conceptual modeling is first and foremost a business‑value exercise: it creates a shared mental model of how the organization makes money, serves customers, and measures success long before any rows are written to a database. By convening business domain experts with data architects around a visual canvas, the process forces the enterprise to name its core entities, clarify their meanings, and expose any hidden disagreements about how work actually gets done. Those “aha” conversations—when two departments realize they attach different definitions to “customer,” or when a product manager finally sees how a support ticket relates to revenue—are where real value is generated; the diagram that emerges is simply the codified outcome of that alignment.

From a technical perspective, the conceptual model still matters: it inventories entities, identifies their high‑level relationships, and provides the canonical vocabulary that logical and physical designs will later reference. Because it lives at the highest level of abstraction, it can flex in depth or visual notation to suit the audience, whether that means a simple domain chart for executives or a richer ER sketch for data stewards. Its digital form—in a collaborative modeling tool rather than on a transient whiteboard—lets teams iterate quickly, capture revisions, and reuse the artifact as a living blueprint for downstream work such as data architecture, analytics, and even application development. Thus, conceptual modeling both uncovers the business truth and supplies the technical foundation on which every subsequent design and analysis step confidently stands.

Logical

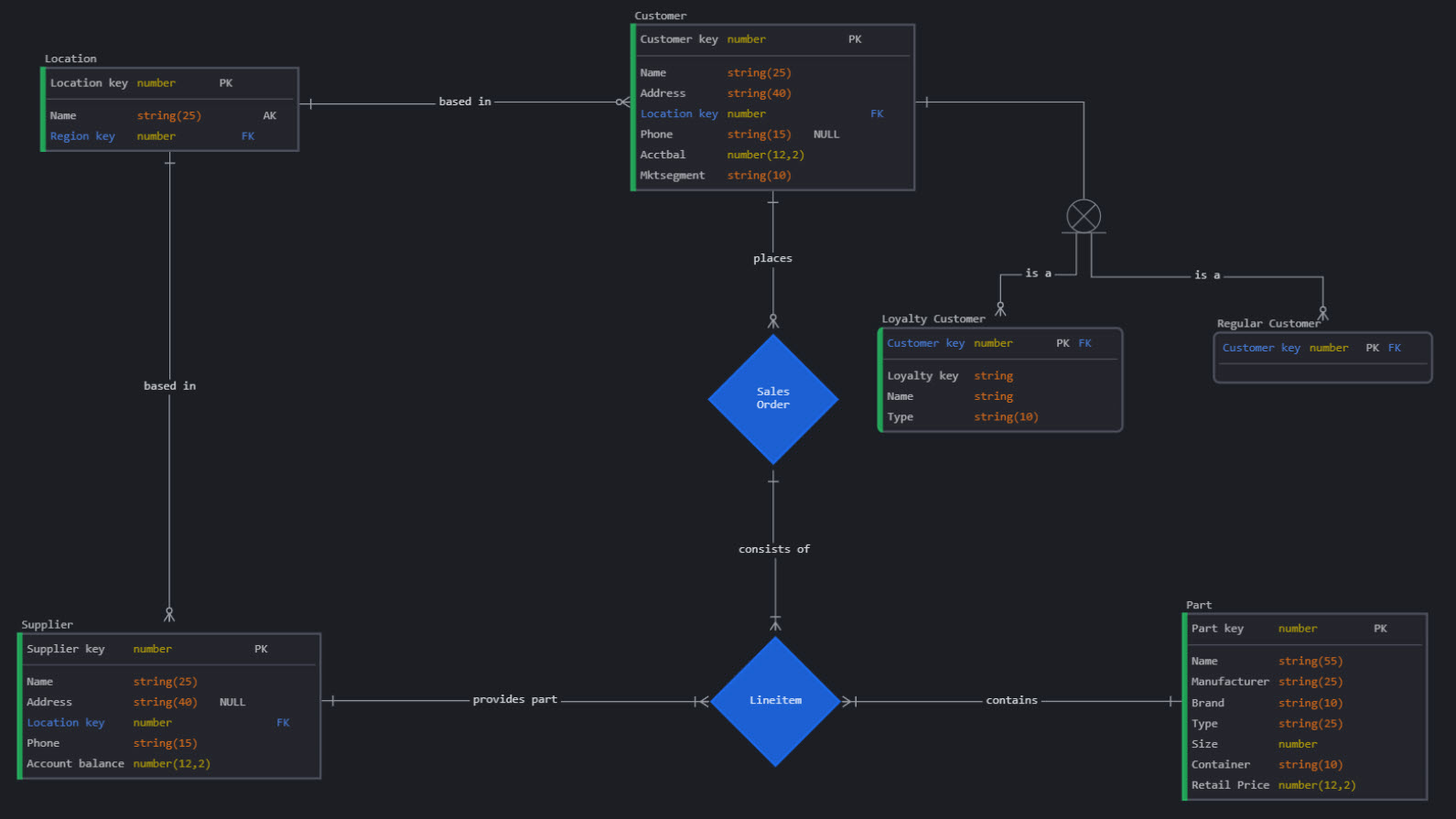

Logical modeling is the bridge between the business’s conceptual operating model and the physical structure of the database. It uses conventions that give context to the nature of the data beyond what conceptual or physical models can express.

Logical modeling converts the business insight captured in the conceptual model into an implementation‑ready blueprint that still speaks a language everyone can follow. By defining cardinalities, optionality, sub‑ and super‑type structures, unique identifiers, and preliminary data types, it makes explicit the business rules that keep the organization on solid ground: a customer must have at least one address but a phone number is optional; a “Loyalty Customer” and “Regular Customer” order are variations of the same super‑entity and therefore roll up to a single company department. Because the notation remains platform‑agnostic, the model is free from Snowflake‑ or Postgres‑specific syntax, which lets architects validate integrity constraints with stakeholders long before costly engineering work begins and ensures that every downstream system—ETL pipelines, BI tools, machine‑learning features—draws from a single, unambiguous definition of truth.

At the same time, logical modeling introduces just enough technical structure to make subsequent automation possible. Generic data types (string, integer, datetime), normalized keys, and rule annotations can be fed directly into design tools or manually transformed to generate physical DDL for any chosen database engine. This middle layer therefore saves money and time twice: first, by surfacing rule conflicts early for the business to resolve, and second, by reducing re‑work when engineers translate the design into production‑grade schemas. In short, logical modeling links business semantics to technical execution, preserving nuance that would be lost in a purely conceptual sketch and preventing the lock‑in that comes with an immediate leap to physical tables.

Physical

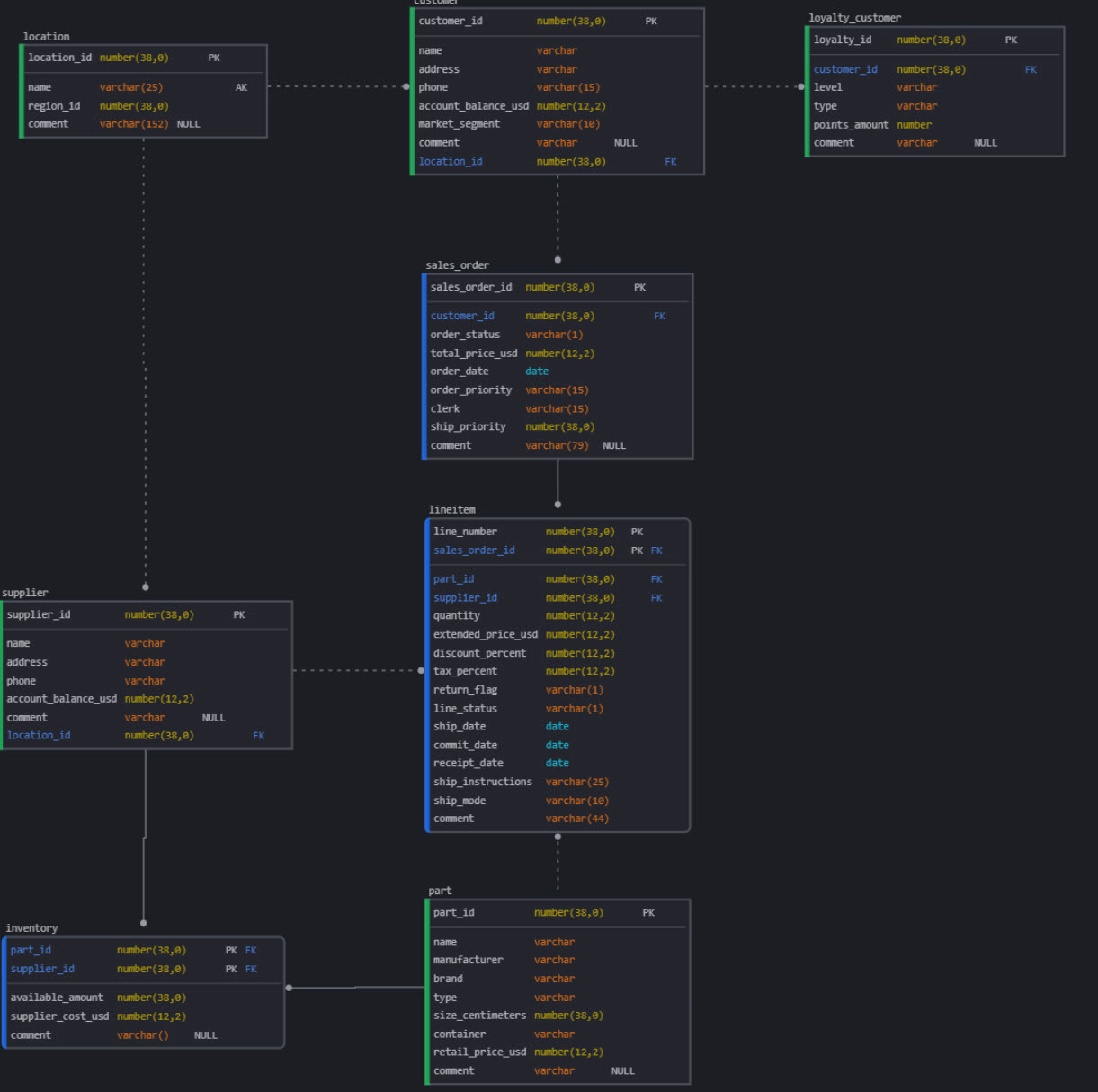

When we descend one more level to the physical model, abstraction gives way to concrete reality and turns those rules into Snowflake objects. Here, each entity becomes a table or view named exactly as Snowflake expects; every generic “string” or “integer” in the logical diagram is restated as VARCHAR(16777216) or NUMBER(38,0) in Snowflake syntax; relationships are enforced with primary and foreign‑key constraints; and operational characteristics—tags, masking policies, retention periods, clustering keys, etc.—are laid down so that performance, security, and cost control travel with the DDL.

Much of the journey from logical to physical is mechanical and can be generated by tools like SqlDBM: constraints, data‑type conversions, and even schema‑level naming patterns require little human intervention. Yet the physical layer still hides critical design choices. Architects must decide whether subtype roll‑ups (for example, merging EMPLOYEE and CONTRACTOR into a single PERSON table) will simplify queries or muddy security boundaries; whether clustering keys or materialised views deliver the better latency‑to‑cost ratio; how many compute clusters are needed to meet concurrency SLAs; and what retention settings strike the right balance between risk and storage spend. These are business and usage‑pattern questions, not merely technical ones.

For end users, the pay‑off is tangible: a well‑crafted physical model means predictable, discoverable schemas; dashboards that refresh quickly because micro‑partitions are pruned efficiently; data that obeys row‑level security the moment it lands; and environments that DevOps pipelines can spin up, clone, or roll back in minutes. In short, while the conceptual model defines meaning and the logical model defines rules, the physical model defines reality—it is the layer where data’s business value finally becomes runnable, governable, and performant in Snowflake.

Transformational

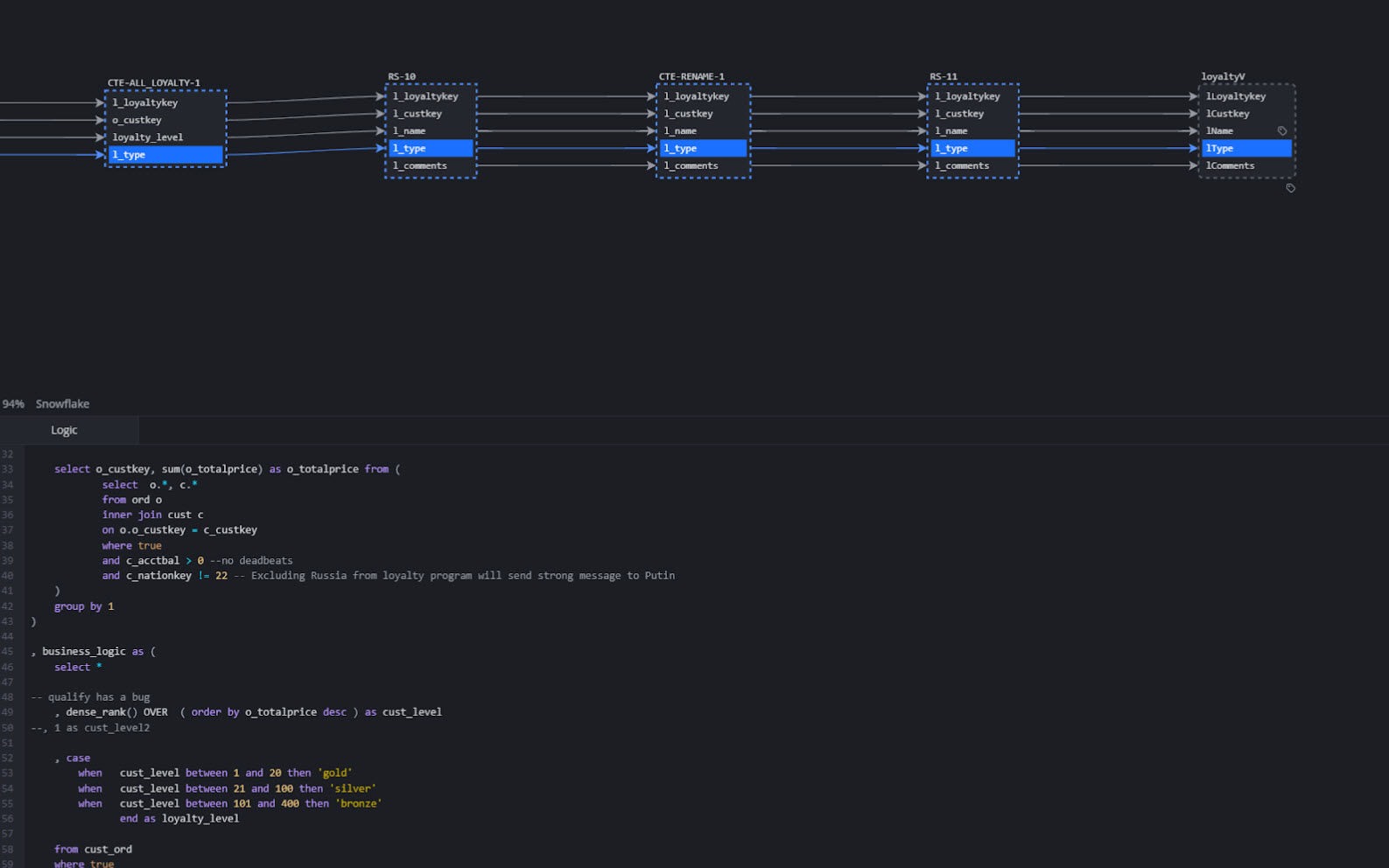

It’s unfortunate that the word logical has already been taken by a class of modeling and can’t be used to express logic-based transformations such as views and CREATE TABLE AS SELECT (CTAS) tables.

Modeling through transformational logic is a powerful and highly maneuverable method for modeling data that comes with one serious drawback: it needs existing data to SELECT from. Transformational modeling is rarely done in transactional databases because, in such systems, data is created and modified through the company’s operational processes (e.g., purchases and profile updates)—with which expensive transformational processes should not compete for critical system resources. However, in data warehouses, where conformed datasets are extracted and duplicated with fresh timestamps for each load, transformational modeling becomes a necessity.

Transformational modeling, unlike relational, isn’t concerned with the structure of the data it is meant to hold because it uses existing data as part of the creation process. Instead, Transformational modeling creates new objects by selecting data from existing sources. This is done through views, using the CREATE TABLE AS SELECT (CTAS) command, or other DML statements like MERGE INTO.

Transformational modeling occurs rapidly. Column names and underlying logic can be adjusted as quickly as a data engineer changes a line of code. However, this ease of creation and subsequent adjustment can lead developers to lose sight of the modeling rigor that has shaped their approach and the source data.

Investing time in relational data modeling before writing data warehouse transformations, such as CTAS (Create Table As Select) or MERGE INTO, ensures a clear and consistent foundation that reflects business logic. This approach enables scalable, reusable, and efficient data pipelines.

By defining well-structured entities and relationships upfront, teams can reduce rework, prevent data silos, and avoid costly misinterpretations. This proactive modeling fosters long-term business value by promoting trusted and governed data—crucial for accurate reporting, compliance, and confident decision-making across the organization.

From Idea to Impact: Modeling as an Enterprise Discipline

Data modeling is more than a technical task—it’s a strategic process that aligns business meaning with technical execution. The journey from conceptual to logical to physical modeling, and finally to transformational modeling, reflects the maturing of an idea into a trusted, performant, and governable data asset. Each layer builds upon the last: the conceptual model anchors design in shared business understanding; the logical model enforces rules and resolves ambiguity; the physical model delivers operational excellence; and the transformational layer adapts these foundations into analytical insights at scale.

By treating modeling not as a one-time design step but as an ongoing, layered practice, organizations create a data landscape that is not only technically sound but also deeply aligned with how the business works—and how it aspires to grow. Whether you’re drafting your first domain diagram or optimizing Snowflake views, approaching each layer with intent and clarity ensures that data becomes an accelerant for value, not a source of confusion. Modeling, done right, is how raw data becomes real insight.