SqlDBM now shows you what dbt can’t — the full story of your data

When it comes to data transformations, the dbt framework is practically an industry standard. dbt helps teams transform raw data into clean, auditable data assets while bringing software engineering best practices, such as modularity, testing, and CI/CD, into the analytics layer. But dbt is not without its critics. Since its inception, it has been criticized for complexity that spirals when left unchecked, for a clunky SQL-first approach to dynamic data, and for incorrectly co-opting the word “model”. However, one of dbt’s most glaring gaps, and arguably the one that keeps it a “transformation tool” rather than a “data platform,” is its lack of visibility into the overall database landscape. dbt provides great out-of-the-box functionality for visualizing transformational lineage, but makes no attempt to show the relationships between its sources, even though those relationships are what make the transformations possible in the first place.

Luckily, SqlDBM has a solution to bridge this visibility gap. But before diving into the solution, let’s discuss the problems that it aims to address.

Blind Spots in the Data Landscape

dbt projects face two major visibility gaps: the missing context between sources, and the lack of visibility into anything that happens outside dbt. By design, sources must be explicitly declared within dbt; tables that aren’t declared as sources remain invisible to a dbt project. And even declared sources suffer from a critical gap: relationships.

Although dbt sources support features such as freshness checks and metadata, they cannot declare constraints, most notably primary and foreign keys. For example, knowing that CUSTOMER_ID is the PK of CUSTOMER and an FK in ORDERS is critical for joining these tables, but for sources these relationships can only be approximated through descriptions or data tests such as `unique`, `not_null`, and `relationships`. Because this is manual work, it is often skipped, leaving basic information missing from dbt projects and sending engineers on time-consuming searches for relationships or for the specific attributes a report needs.
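To make the gap concrete, here is a minimal Python sketch of what recovering FK-like information from a dbt project looks like today: scanning the manifest for `relationships` data tests. The manifest excerpt is hand-written and hypothetical (project `shop`, source `raw`), and the field names it reads (`resource_type`, `attached_node`, `test_metadata.kwargs`) reflect our understanding of recent manifest versions, so verify them against your own `manifest.json`. Note that a relationship only appears here if someone actually wrote the test.

```python
# Hand-written, hypothetical excerpt of a dbt manifest.json (project "shop").
# Field names follow recent manifest versions as we understand them; verify locally.
manifest = {
    "nodes": {
        "test.shop.source_relationships_raw_orders_customer_id.a1b2c3": {
            "resource_type": "test",
            "attached_node": "source.shop.raw.orders",
            "column_name": "customer_id",
            "test_metadata": {
                "name": "relationships",
                "kwargs": {
                    "column_name": "customer_id",
                    "to": "source('raw', 'customer')",
                    "field": "customer_id",
                },
            },
        },
        "test.shop.source_not_null_raw_orders_order_id.d4e5f6": {
            "resource_type": "test",
            "attached_node": "source.shop.raw.orders",
            "column_name": "order_id",
            "test_metadata": {"name": "not_null", "kwargs": {"column_name": "order_id"}},
        },
    }
}


def implied_foreign_keys(manifest: dict) -> list[tuple]:
    """Return (child node, child column, parent reference, parent column) tuples
    taken from `relationships` data tests, the closest thing to an FK on a dbt source."""
    fks = []
    for node in manifest["nodes"].values():
        metadata = node.get("test_metadata") or {}
        if node.get("resource_type") != "test" or metadata.get("name") != "relationships":
            continue  # not_null, unique, models, etc. carry no relationship information
        kwargs = metadata.get("kwargs", {})
        fks.append((
            node.get("attached_node"),
            kwargs.get("column_name", node.get("column_name")),
            kwargs.get("to"),
            kwargs.get("field"),
        ))
    return fks


print(implied_foreign_keys(manifest))
```

Anything not covered by such a test, which in practice is most of the source landscape, simply does not exist as far as the project is concerned.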

Another important aspect often missing from dbt is data provenance. While dbt provides some lineage views, like the project DAG, they stop at the boundaries of the project. Even when sources are declared in YAML, there’s no visual indicator of the source system they come from or of any lineage upstream of them. Business users typically refer to source data by the specific fields or screens they see in source applications, which makes it hard to trace how that data reaches the warehouse without a detailed data model of the source system, including ownership or stewardship information.

But it doesn’t have to be this messy. Data teams shouldn’t have to choose between flying blind at design time and constantly switching context during their workflow!

SqlDBM + dbt Manifest = One Unified View

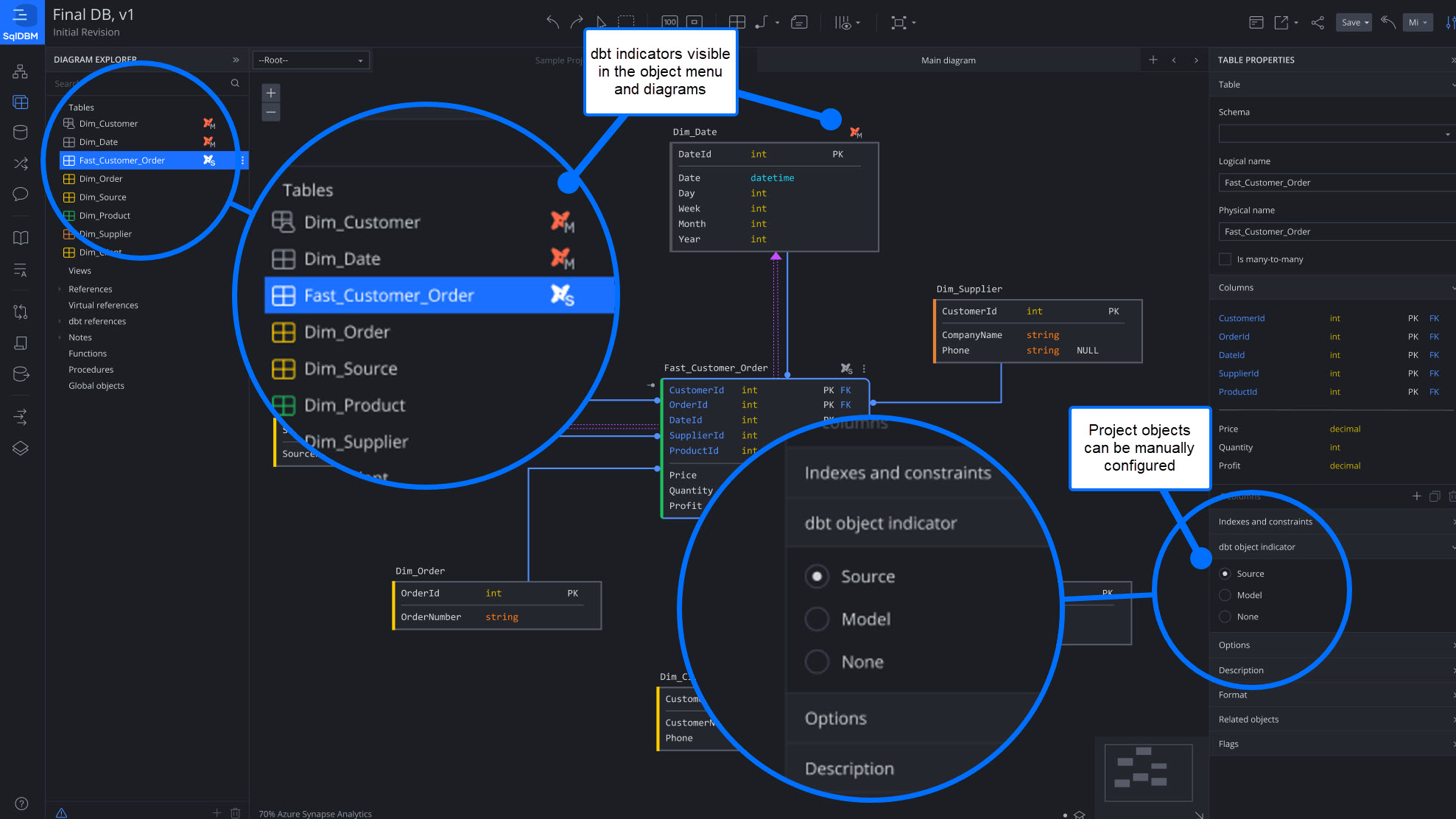

Users have often praised SqlDBM as the intuitive, collaborative data modeling tool that gives the entire organization access to the big picture of its data landscape. Now, we’re letting our users see even further with dbt manifest upload. The dbt manifest (`manifest.json`) contains everything needed to identify dbt sources, models, and their dependencies and, most importantly, their dbt file paths, which connect each fully qualified database object name to the corresponding dbt file. Users can upload the manifest through the UI, automate the import through our API, or work bottom-up and define these properties as part of their design.
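As a rough illustration of that connection, the following Python sketch builds a lookup from fully qualified object names to dbt resource types and file paths. It is not SqlDBM’s implementation: the manifest excerpt is hypothetical and trimmed to the fields the sketch reads, and upper-casing names assumes a warehouse that resolves unquoted identifiers case-insensitively, such as Snowflake.

```python
# Hypothetical, trimmed manifest.json excerpt; real manifests carry many more fields.
manifest = {
    "nodes": {
        "model.shop.fct_sales": {
            "resource_type": "model",
            "database": "analytics",
            "schema": "marts",
            "name": "fct_sales",
            "alias": "fct_sales",
            "original_file_path": "models/marts/fct_sales.sql",
        },
        "test.shop.not_null_fct_sales_sale_id.a1b2c3": {
            "resource_type": "test",
            "original_file_path": "models/marts/_marts.yml",
        },
    },
    "sources": {
        "source.shop.raw.orders": {
            "resource_type": "source",
            "database": "raw",
            "schema": "shop",
            "source_name": "raw",
            "name": "orders",
            "identifier": "ORDERS",
            "original_file_path": "models/staging/_sources.yml",
        },
    },
}


def fully_qualified(database: str, schema: str, relation: str) -> str:
    # Assumption: the warehouse resolves unquoted identifiers case-insensitively.
    return f"{database}.{schema}.{relation}".upper()


def index_dbt_objects(manifest: dict) -> dict[str, tuple[str, str]]:
    """Map DATABASE.SCHEMA.OBJECT -> (dbt resource type, dbt file path)."""
    index = {}
    for node in manifest["nodes"].values():
        if node["resource_type"] != "model":
            continue  # tests, seeds, snapshots, etc. are skipped in this sketch
        relation = node.get("alias") or node["name"]
        key = fully_qualified(node["database"], node["schema"], relation)
        index[key] = ("model", node["original_file_path"])
    for source in manifest["sources"].values():
        relation = source.get("identifier") or source["name"]
        key = fully_qualified(source["database"], source["schema"], relation)
        index[key] = ("source", source["original_file_path"])
    return index


for name, (kind, path) in index_dbt_objects(manifest).items():
    print(f"{name:<28} {kind:<7} {path}")
```

Models use `alias` (falling back to `name`) and sources use `identifier` (falling back to `name`) because those are the names the objects actually have in the warehouse.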

With this information, SqlDBM can do things that were never possible before in any other tool!

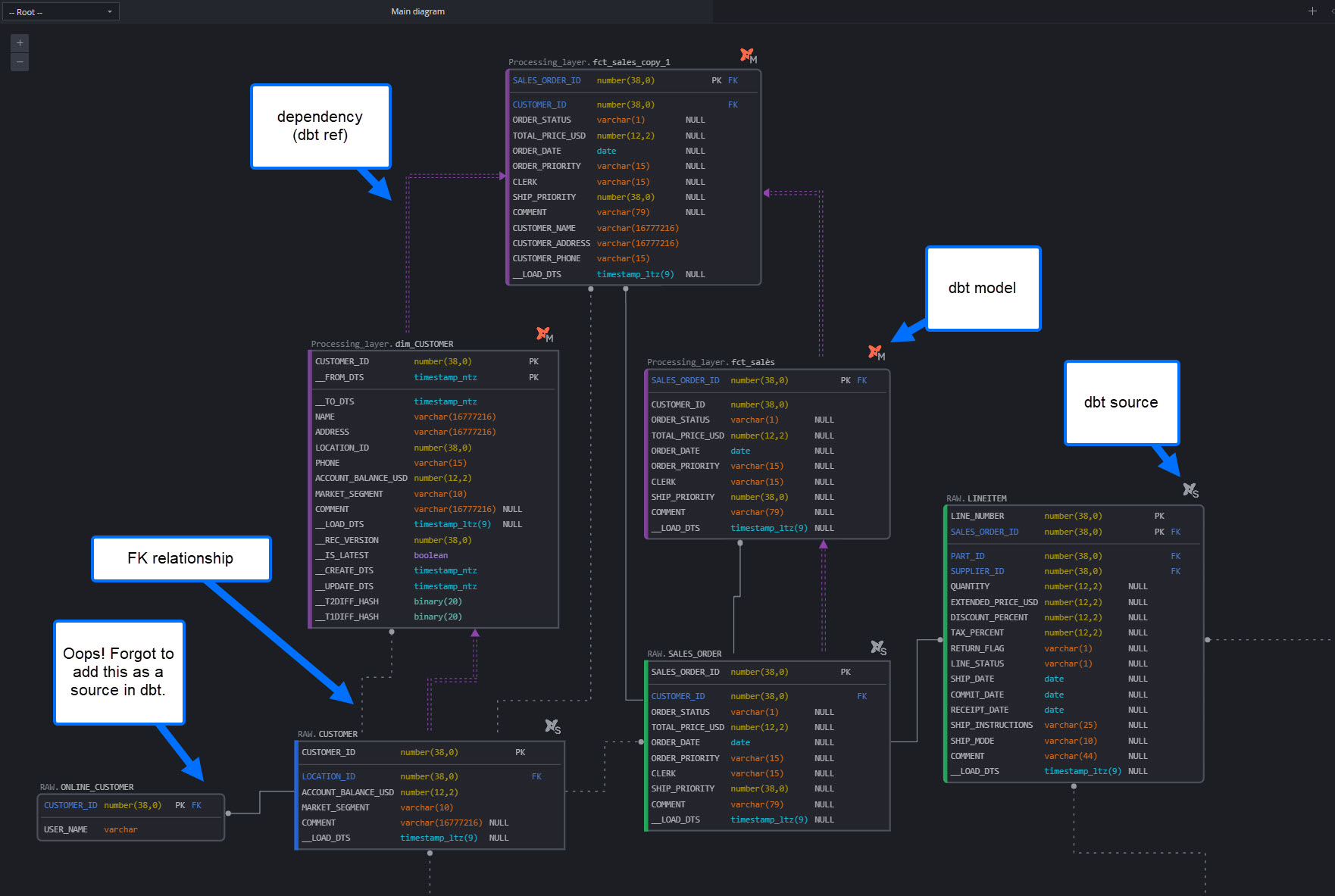

The first step is to automatically detect and visually label dbt objects on a relational diagram. Using the information in the manifest file, the database objects in a SqlDBM project, identified by their fully qualified names, are mapped to the corresponding sources and models defined in the dbt manifest. This single step gives the team three important pieces of information never before seen on an ERD:

- Which database objects are dbt sources

- Which database objects are dbt models

- Which database objects are maintained outside of dbt
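Once a lookup from fully qualified names to dbt resource types exists, the three labels come down to a dictionary lookup with a default. In this hypothetical Python sketch, `dbt_lookup` stands in for a lookup already derived from the manifest and `diagram_objects` for the tables on a diagram; neither name comes from SqlDBM. Anything not found in the manifest is labeled as maintained outside of dbt.

```python
from collections import defaultdict

# Hypothetical lookup derived from a dbt manifest: fully qualified name -> resource type.
dbt_lookup = {
    "RAW.SHOP.ORDERS": "source",
    "RAW.SHOP.CUSTOMER": "source",
    "ANALYTICS.MARTS.FCT_SALES": "model",
}

# Hypothetical objects on a relational diagram, identified by fully qualified name.
diagram_objects = [
    "raw.shop.orders",
    "RAW.SHOP.CUSTOMER",
    "ANALYTICS.MARTS.FCT_SALES",
    "RAW.CRM.CAMPAIGN",
]


def label_objects(objects: list[str], lookup: dict[str, str]) -> dict[str, list[str]]:
    """Group diagram objects into dbt sources, dbt models, and objects outside dbt."""
    labels = defaultdict(list)
    for name in objects:
        # Normalize case before matching; unmatched objects are not managed by dbt.
        labels[lookup.get(name.upper(), "outside dbt")].append(name)
    return dict(labels)


print(label_objects(diagram_objects, dbt_lookup))
# {'source': ['raw.shop.orders', 'RAW.SHOP.CUSTOMER'], 'model': ['ANALYTICS.MARTS.FCT_SALES'], 'outside dbt': ['RAW.CRM.CAMPAIGN']}
```

The third bucket is the interesting one: it is exactly the part of the landscape that a dbt project alone cannot show.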

The screenshot above shows an example of a mixed relational/transformational use case displayed on the same diagram, the first of its kind! Foreign key relationships are displayed using IDEF1X notation, while transformational dependencies (which would normally be visualized in a DAG) are drawn in a distinctive purple. Each can be toggled on or off in the diagram settings for a different perspective or a cleaner look. Metadata, such as related objects and the dbt project path, is also stored as part of the project.
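The purple transformational edges correspond to dependencies that dbt already records in the manifest. A minimal Python sketch, assuming the top-level `parent_map` (each unique ID mapped to its parents’ unique IDs) and the per-node `relation_name` field, shows how those dependencies could be turned into edges between database objects. The excerpt is hypothetical, and stripping quotes and upper-casing names is a simplifying assumption about how relation names are normalized.

```python
# Hypothetical manifest excerpt: only the fields this sketch reads.
manifest = {
    "nodes": {
        "model.shop.stg_orders": {"resource_type": "model", "relation_name": "analytics.staging.stg_orders"},
        "model.shop.fct_sales": {"resource_type": "model", "relation_name": "analytics.marts.fct_sales"},
        "test.shop.not_null_fct_sales_sale_id.a1b2c3": {"resource_type": "test", "relation_name": None},
    },
    "sources": {
        "source.shop.raw.orders": {"resource_type": "source", "relation_name": '"RAW"."SHOP"."ORDERS"'},
    },
    "parent_map": {
        "source.shop.raw.orders": [],
        "model.shop.stg_orders": ["source.shop.raw.orders"],
        "model.shop.fct_sales": ["model.shop.stg_orders"],
        "test.shop.not_null_fct_sales_sale_id.a1b2c3": ["model.shop.fct_sales"],
    },
}


def transformation_edges(manifest: dict) -> list[tuple[str, str]]:
    """Return (upstream object, downstream object) pairs between sources and models."""
    objects = {**manifest["sources"], **manifest["nodes"]}

    def object_name(unique_id: str):
        node = objects.get(unique_id)
        if not node or node["resource_type"] not in ("model", "source") or not node.get("relation_name"):
            return None  # tests and other non-relational nodes are not drawn
        return node["relation_name"].replace('"', "").upper()

    edges = []
    for child, parents in manifest["parent_map"].items():
        for parent in parents:
            upstream, downstream = object_name(parent), object_name(child)
            if upstream and downstream:
                edges.append((upstream, downstream))
    return edges


for upstream, downstream in transformation_edges(manifest):
    print(f"{upstream} -> {downstream}")
```

Because both edge endpoints are fully qualified names, these edges can sit on the same diagram as the foreign keys between the same tables.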

This feature goes beyond visuals by improving SqlDBM’s existing dbt integrations. Using the dbt object associations from the manifest, SqlDBM can now place generated YAML files at the correct dbt file paths. Combined with SqlDBM’s push-to-git feature, these YAML files can mirror the dbt project folder structure directly, making it much easier to keep the data model and the dbt project in sync.
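To illustrate why file paths matter here, the Python sketch below groups sources by the `original_file_path` recorded in the manifest and renders one minimal `sources:` YAML document per file, so each document lands where the dbt project already expects it. This is a hypothetical simplification, not SqlDBM’s generator: the source entries are invented, only names are written, and the YAML text is built by hand to stay within the standard library.

```python
from collections import defaultdict

# Hypothetical source entries as they might appear in manifest["sources"].
sources = {
    "source.shop.raw.orders": {
        "source_name": "raw", "name": "orders",
        "original_file_path": "models/staging/shop/_sources.yml",
    },
    "source.shop.raw.customer": {
        "source_name": "raw", "name": "customer",
        "original_file_path": "models/staging/shop/_sources.yml",
    },
    "source.shop.crm.campaign": {
        "source_name": "crm", "name": "campaign",
        "original_file_path": "models/staging/crm/_sources.yml",
    },
}


def render_source_files(sources: dict) -> dict[str, str]:
    """Return {dbt file path: YAML text}, one document per original source file."""
    # path -> source_name -> [table names], preserving manifest order
    grouped = defaultdict(lambda: defaultdict(list))
    for source in sources.values():
        grouped[source["original_file_path"]][source["source_name"]].append(source["name"])

    files = {}
    for path, by_source in grouped.items():
        lines = ["version: 2", "", "sources:"]
        for source_name, tables in by_source.items():
            lines += [f"  - name: {source_name}", "    tables:"]
            lines += [f"      - name: {table}" for table in tables]
        files[path] = "\n".join(lines) + "\n"
    return files


for path, text in render_source_files(sources).items():
    print(f"# {path}\n{text}")
```

Keying the output by the existing file path, rather than generating one large file, is what lets a push to git update the project in place instead of creating a parallel structure.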

So what does all this functionality add up to?

A Single Pane of Glass for Your Data Landscape

One of the biggest challenges reported by dbt users is project sprawl: the proliferation of models, sources, macros, and tests into an unmanageable web, to the point where no one quite knows how everything connects or why certain things exist. Project sprawl happens primarily because of a lack of visibility, governance, and centralized model lifecycle management. With long, tangled DAGs and little (relational) modeling, it is easy to lose track of hundreds of tables, duplicate logic, and end up with conflicting conventions between teams or developers.

This is why a single pane of glass for your data landscape is vital to its long-term stability and scalability. dbt is geared toward action: declarative SQL statements that drive which data transformations and validations are performed. However, without a design-first approach that enforces standards, consistent naming, and logic reuse, users often make decisions on the fly, which adds to project complexity. Fortunately, there’s a better approach!

By centralizing design, planning, and discovery in SqlDBM, users can ensure faster, more consistent, and more reliable transformations. Let’s walk through a typical dbt change request and observe what it looks like with and without a centralized design and governance pane. Suppose our business stakeholders asked for marketing campaign details to be included in the sales reporting table.

dbt-only scenario:

- The data engineer assigned to the task isn’t familiar with marketing data and has to ask around.

- No one on the team has worked with marketing data before, so a follow-up meeting with the business stakeholders is called to clarify the requirements and to source the necessary data assets.

- After some wrangling, the data engineer manages to join the marketing data to the sales data.

- Because so much time was spent on requirements gathering, the engineer does not have the time to write documentation or update the YAML definition of the assets being changed.

- Upon deployment, the business stakeholders report that not all expected campaigns are visible in their report. Existing campaigns are not accurately represented, and some fields are misinterpreted due to naming irregularities.

- The data engineer is reassigned to fix the issues in the next release, and the cycle begins again.

- Ultimately, the data is fixed, but a year later, a data audit reveals that marketing campaign data already exists in another schema, and by then, dependencies make it impractical to deduplicate.

- And so it goes.

A better alternative:

- A data model is maintained in SqlDBM, accessible to everyone, where all sources and transformations are curated to ensure the discoverability of consistent data assets.

- The data engineer searches and finds the marketing campaign data that another team is already loading.

- The data engineer can instantly join this data to the sales report because the foreign keys and conformed dimensions have already been defined.

- After reviewing the naming standards and conventions in SqlDBM, the engineer ensures that the new attributes are consistent with the company’s standards.

- By using SqlDBM to model the changes, the data landscape stays up to date, and the engineer can automatically generate dbt YAML to accompany the changes.

- Business stakeholders consume the data as expected. No mistakes are made, and no rework is required.

With the addition of dbt manifest import, SqlDBM offers a more comprehensive view of the data landscape than ever, covering all sources, models, and dependencies, not just those within a dbt project. Teams can collaborate in a single environment that clearly shows source relationships, origins, and transformations. Accurate dbt path mappings let SqlDBM automatically generate source and model YAML files in the correct folder structure, keeping them consistent with the project. The result is a streamlined workflow in which teams design, document, and deploy from a single integrated platform: no context switching or manual documentation, just one unified interface.

An accessible visual tool like SqlDBM further reduces barriers for less tech-savvy team members, such as data stewards and governance staff, enabling them to manage and monitor data quality via a user-friendly web interface instead of YAML specs. Additionally, business stakeholders can review and assess designs early, before costly engineering and data transformations are implemented.

Simply put, it’s a better, more efficient way to operate: data transformations are delivered faster, and the process scales as the enterprise grows.

Conclusion: A New Era of Clarity for dbt Users

SqlDBM now shows you what dbt can’t—the full story of your data. With the addition of dbt manifest import, SqlDBM bridges the gap between transformation logic and the underlying data landscape, creating a unified view that connects design, documentation, and deployment. This feature doesn’t just visualize; it operationalizes clarity, giving every team member—from data engineers to business users—a shared, accurate, and actionable understanding of how data flows across the organization.

The benefits speak for themselves:

- A single pane of glass: View upstream and downstream lineage and relationships across all sources and models.

- A new perspective: Toggle between relational and transformational views, or display them together without switching context.

- Faster onboarding: New team members instantly grasp the full data flow and dependencies.

- Operational efficiency: No wasted effort, because the data model stays current and dbt documentation is generated automatically.

- Seamless collaboration: Bridge the gap between data engineers, modelers, and analysts with a shared visual context.

- A better UX: Low barrier to entry for non-technical users who prefer a visual interface to code.

- Analysis becomes documentation: API-driven import means this can plug into CI/CD pipelines for automated, up-to-date documentation.

These capabilities enable teams to work more quickly, ensure consistency, and make confident, informed decisions. SqlDBM offers the design-first approach that dbt has long required, blending technical accuracy with user-friendly visualization. Are you curious to see what your dbt project looks like when it’s augmented with relational information and the insights it unlocks? Give it a try and find out.

For more information on SqlDBM and dbt integration, check out these related articles:

The Power of Synergy, SqlDBM and dbt

Database Modeling – Relational vs Transformational